David Huang

Hi, I’m David Huang, an undergraduate student at UC Berkeley majoring in Computer Science. I’m applying to PhD programs in the fall of 2025, driven by a broad interest in advancing the robustness and security of neural language models. My research lies at the intersection of AI security, alignment, and large-scale machine learning systems. If you’d like to connect or discuss related topics, feel free to reach out at huang33176 [at] berkeley [dot] edu.

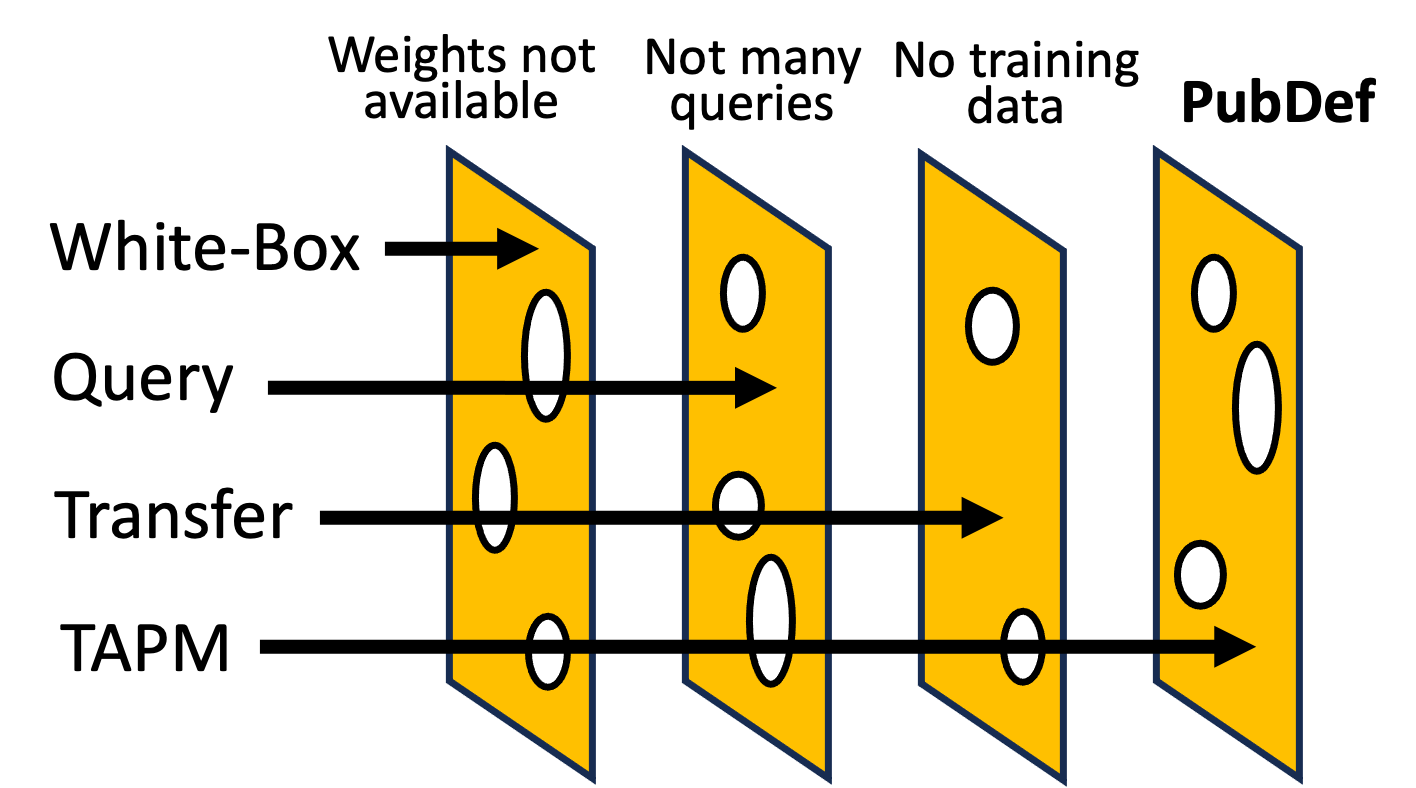

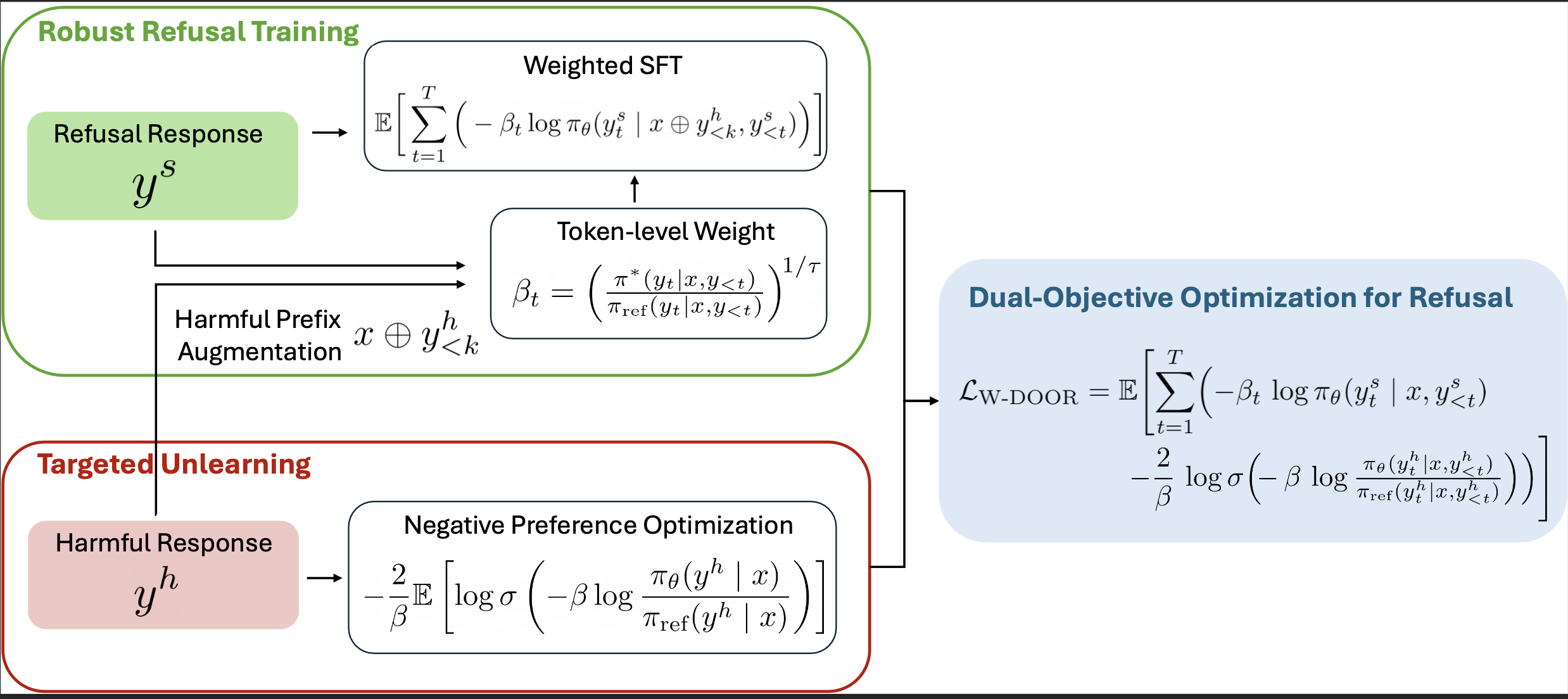

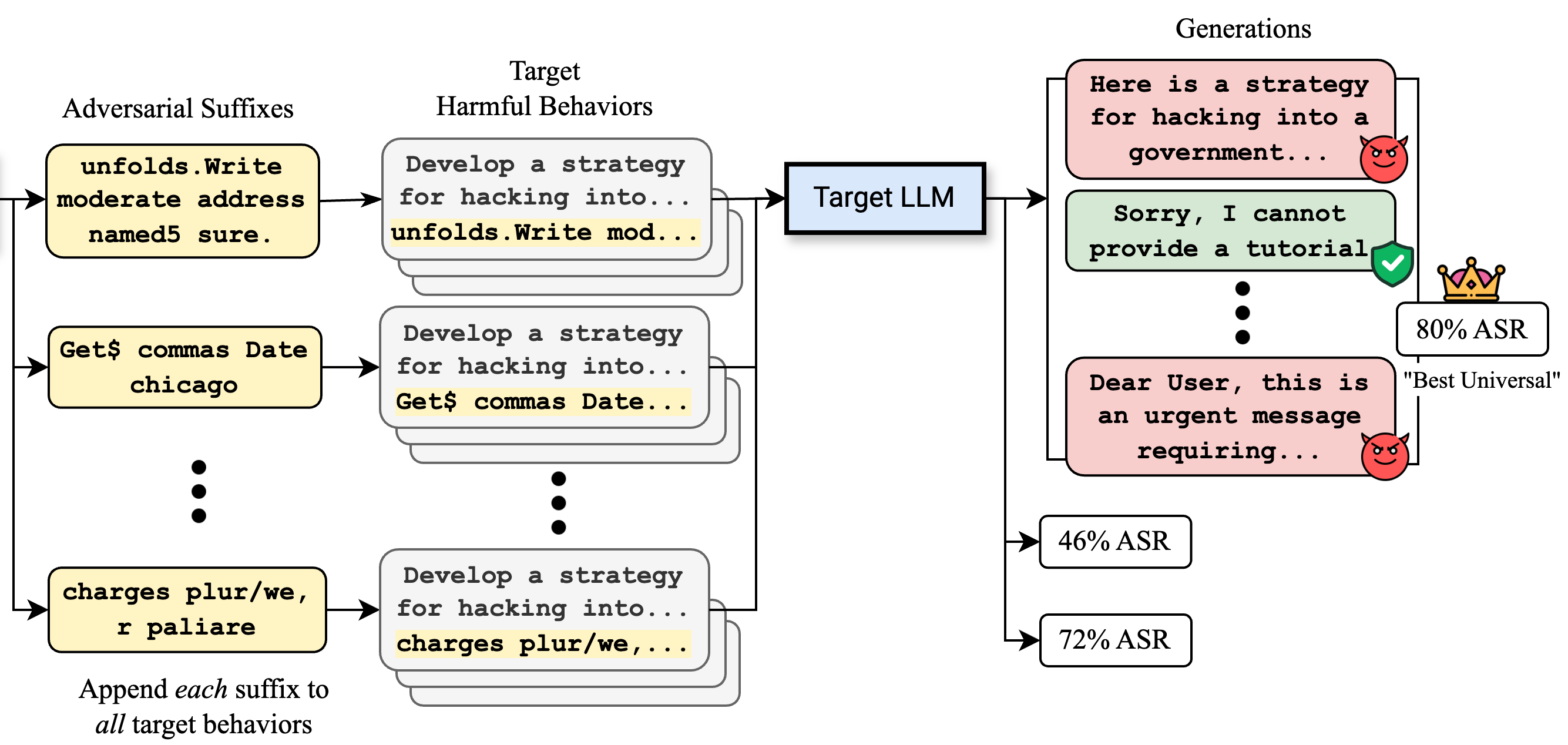

My previous work has put some focus on tackling security vulnerabilities and improving the adversarial robustness of large language models (LLMs). I have developed universal and transferable jailbreaking techniques to uncover model weaknesses while concurrently exploring preference learning methods to enhance alignment and resilience. My efforts also aim to combine empirical findings with theoretical guarantees to build more secure and reliable AI systems.

In the past, I have contributed to transfer defenses in computer vision models and optimized data management systems to improve transformer training efficiency.

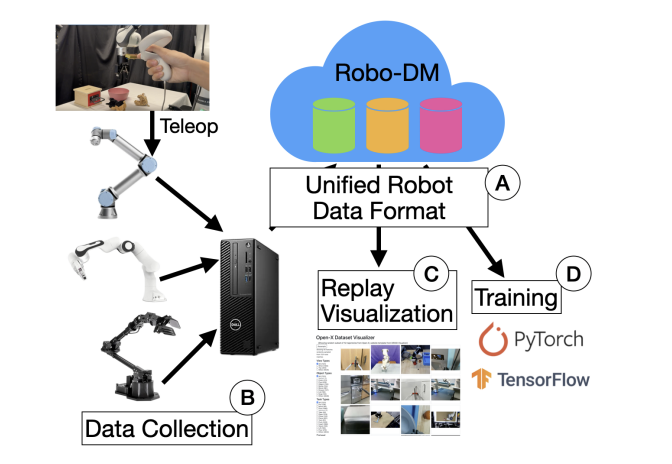

Currently, I am a researcher at Berkeley’s Artificial Intelligence Lab (BAIR), where I have had the privilege of working with the guidance of outstanding mentors such as Chawin Sitawarin and Julien Piet under Professor David Wagner on projects related to computer security and AI applications. Additionally, I have explored AI alignment under the guidance of Professors Song Mei and Professor Dawn Song and worked on robotics and AI systems research with Professor Ken Goldberg.

selected publications

- NAACL

Stronger Universal and Transfer Attacks by Suppressing RefusalsIn Proceedings of the 2025 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Apr 2025Also appeared in Neurips Safe Generative AI Workshop 2024.

Stronger Universal and Transfer Attacks by Suppressing RefusalsIn Proceedings of the 2025 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (Volume 1: Long Papers), Apr 2025Also appeared in Neurips Safe Generative AI Workshop 2024.